How to use prebuilt evaluators

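LangSmith integrates with the open-source openevals package, which provides a suite of prebuilt, ready-made evaluators that you can use right away as starting points for your evaluations.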

Note

This how-to guide demonstrates how to set up and run one type of evaluator (LLM-as-a-judge), but many others are available. See the openevals and agentevals repos for a complete list with usage examples.

Setup

You'll need to install the openevals package to use the prebuilt LLM-as-a-judge evaluators.

Python:

pip install -U openevals

TypeScript:

yarn add openevals @langchain/core

You'll also need to set your OpenAI API key as an environment variable, though you can choose a different provider:

export OPENAI_API_KEY="your_openai_api_key"
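Assuming the provider:model naming used in this guide's examples (such as "openai:o3-mini"), a missing key usually only surfaces once the first evaluation tries to call the model. The stdlib-only sketch below checks for the key up front instead. The helper missing_api_key and its provider-to-variable table are hypothetical, written for illustration; they are not part of openevals, and the table lists only two providers as an assumption.

```python
import os
from typing import Mapping, Optional

# Hypothetical table: provider prefix -> environment variable that the
# provider's client conventionally reads. Extend it for other providers.
PROVIDER_ENV_VARS = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
}


def missing_api_key(model: str, env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the name of the unset key variable for `model`.

    Returns None when the key is set or the provider is not in the table.
    `env` defaults to the real process environment.
    """
    env = os.environ if env is None else env
    provider, _, _ = model.partition(":")  # "openai:o3-mini" -> "openai"
    var = PROVIDER_ENV_VARS.get(provider)
    if var is None:
        return None  # unknown provider: nothing to check
    return None if env.get(var) else var
```

For example, calling missing_api_key("openai:o3-mini") at the top of a test module and failing fast when it returns a variable name gives a clearer error than a failed model call halfway through a run.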

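We'll also use LangSmith's pytest integration for Python and its Vitest/Jest integration for TypeScript to run our evals. openevals evaluators can also be used with the evaluate method.

See the appropriate guides for setup instructions.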

Running an evaluator

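The general flow is simple: import the evaluator (or the factory function that creates it) from openevals, then call it inside your test file with inputs, outputs, and reference outputs. LangSmith will automatically log the evaluator's results as feedback.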

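Note that not all evaluators require every parameter (for example, the exact match evaluator only requires outputs and reference outputs). Additionally, if your LLM-as-a-judge prompt requires extra variables, passing them in as kwargs will format them into the prompt.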
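To make both points concrete without calling a model, here is a small stdlib-only sketch. exact_match_sketch and render_prompt are hypothetical stand-ins written for illustration: they mirror the keyword-argument calling convention described above, but they are not openevals' actual implementations. The {rubric} placeholder is an assumed extra variable, and a plain str.format call stands in for whatever formatting openevals does internally.

```python
from typing import Any


def exact_match_sketch(*, outputs: Any, reference_outputs: Any, **_ignored: Any) -> dict:
    """Hypothetical exact-match evaluator: needs only outputs and reference_outputs.

    Extra keyword arguments such as `inputs` are accepted and ignored, so the
    same call site can be shared with evaluators that do use them.
    """
    return {"key": "exact_match", "score": outputs == reference_outputs}


# Hypothetical judge prompt with one extra variable, {rubric}, beyond the
# inputs/outputs/reference_outputs placeholders.
CUSTOM_PROMPT = (
    "Grade the answer using this rubric: {rubric}\n"
    "Question: {inputs}\n"
    "Answer: {outputs}\n"
    "Reference: {reference_outputs}"
)


def render_prompt(template: str, **variables: Any) -> str:
    """Fill every placeholder from keyword arguments, as extra kwargs would be."""
    return template.format(**variables)


prompt = render_prompt(
    CUSTOM_PROMPT,
    rubric="Penalize any numeric error.",  # the extra kwarg
    inputs="How much has the price of doodads changed?",
    outputs="Up 10%.",
    reference_outputs="Down 50%.",
)
```

A missing placeholder value makes str.format raise KeyError, which is a useful early signal that a custom prompt expects a variable you forgot to pass.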

Set up your test file as follows:

Python:

import pytest
from langsmith import testing as t
from openevals.llm import create_llm_as_judge
from openevals.prompts import CORRECTNESS_PROMPT

correctness_evaluator = create_llm_as_judge(
    prompt=CORRECTNESS_PROMPT,
    feedback_key="correctness",
    model="openai:o3-mini",
)

# Mock stand-in for your application
def my_llm_app(inputs: str) -> str:
    return "Doodads have increased in price by 10% in the past year."

@pytest.mark.langsmith
def test_correctness():
    inputs = "How much has the price of doodads changed in the past year?"
    reference_outputs = "The price of doodads has decreased by 50% in the past year."
    outputs = my_llm_app(inputs)
    t.log_inputs({"question": inputs})
    t.log_outputs({"answer": outputs})
    t.log_reference_outputs({"answer": reference_outputs})
    correctness_evaluator(
        inputs=inputs,
        outputs=outputs,
        reference_outputs=reference_outputs,
    )

TypeScript:

import * as ls from "langsmith/vitest";
// import * as ls from "langsmith/jest";
import { createLLMAsJudge, CORRECTNESS_PROMPT } from "openevals";

const correctnessEvaluator = createLLMAsJudge({
  prompt: CORRECTNESS_PROMPT,
  feedbackKey: "correctness",
  model: "openai:o3-mini",
});

// Mock stand-in for your application
const myLLMApp = async (_inputs: Record<string, unknown>) => {
  return "Doodads have increased in price by 10% in the past year.";
};

ls.describe("Correctness", () => {
  ls.test("incorrect answer", {
    inputs: {
      question: "How much has the price of doodads changed in the past year?",
    },
    referenceOutputs: {
      answer: "The price of doodads has decreased by 50% in the past year.",
    },
  }, async ({ inputs, referenceOutputs }) => {
    const outputs = await myLLMApp(inputs);
    ls.logOutputs({ answer: outputs });
    await correctnessEvaluator({
      inputs,
      outputs,
      referenceOutputs,
    });
  });
});

The feedback_key/feedbackKey parameter will be used as the name of the feedback in your experiment.
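A rough way to picture that naming: the factory's feedback key ends up as the key field of every result the evaluator returns, and that key is what appears as the feedback name. make_stub_judge below is a hypothetical offline stand-in for create_llm_as_judge that returns a fixed score instead of calling a model. The key/score/comment fields follow the result shape openevals evaluators are commonly shown returning, but treat the exact fields as an assumption to verify against your installed version.

```python
from typing import Any, Callable, Dict


def make_stub_judge(feedback_key: str, fixed_score: bool) -> Callable[..., Dict[str, Any]]:
    """Hypothetical offline stand-in for an LLM-as-a-judge factory.

    A real judge would send the formatted prompt to a model; this stub returns
    `fixed_score` so the naming behaviour can be inspected without network calls.
    """

    def evaluator(*, inputs: Any = None, outputs: Any = None,
                  reference_outputs: Any = None, **_: Any) -> Dict[str, Any]:
        return {
            "key": feedback_key,  # shown as the feedback name in the experiment
            "score": fixed_score,
            "comment": f"stub verdict for {feedback_key}",
        }

    return evaluator


correctness = make_stub_judge("correctness", fixed_score=False)
conciseness = make_stub_judge("conciseness", fixed_score=True)

results = [
    ev(inputs="q", outputs="a", reference_outputs="r")
    for ev in (correctness, conciseness)
]
feedback_names = [r["key"] for r in results]
```

Giving each evaluator a distinct key is what keeps their scores in separate feedback columns; two evaluators sharing a key would be hard to tell apart.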

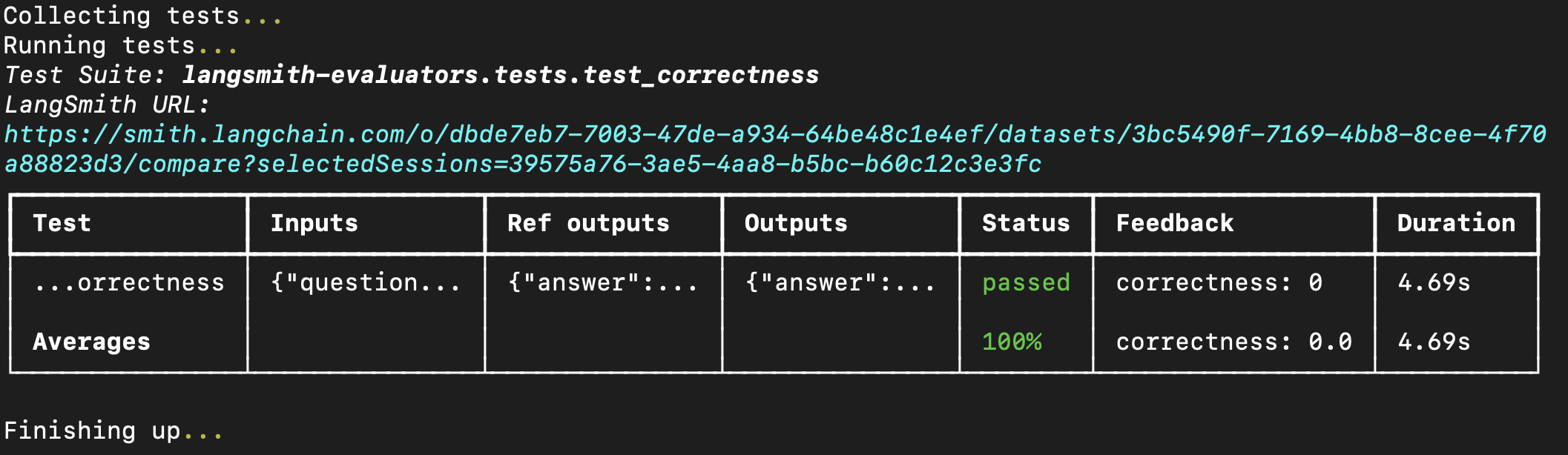

在终端中运行 eval 将导致如下结果:

You can also pass prebuilt evaluators directly into the evaluate method if you have already created a dataset in LangSmith.

If you're using Python, this requires langsmith>=0.3.11:

Python:

from langsmith import Client
from openevals.llm import create_llm_as_judge
from openevals.prompts import CONCISENESS_PROMPT

client = Client()

conciseness_evaluator = create_llm_as_judge(
    prompt=CONCISENESS_PROMPT,
    feedback_key="conciseness",
    model="openai:o3-mini",
)

experiment_results = client.evaluate(
    # This is a dummy target function; replace it with your actual LLM-based system
    lambda inputs: "What color is the sky?",
    data="Sample dataset",
    evaluators=[
        conciseness_evaluator,
    ],
)

TypeScript:

import { evaluate } from "langsmith/evaluation";
import { createLLMAsJudge, CONCISENESS_PROMPT } from "openevals";

// Name of a dataset you have already created in LangSmith
const datasetName = "Sample dataset";

const concisenessEvaluator = createLLMAsJudge({
  prompt: CONCISENESS_PROMPT,
  feedbackKey: "conciseness",
  model: "openai:o3-mini",
});

await evaluate(
  // This is a dummy target function; replace it with your actual LLM-based system
  (_inputs) => "What color is the sky?",
  {
    data: datasetName,
    evaluators: [concisenessEvaluator],
  }
);
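Conceptually, the evaluate call above runs your target on each dataset example, then calls every evaluator with that example's inputs, the target's outputs, and the example's reference outputs. The sketch below imitates that loop entirely offline so the calling convention is visible. run_local_eval, the in-memory examples list, and length_evaluator (a crude word-count proxy for conciseness) are hypothetical; this is not how LangSmith's evaluate is implemented, and the real method also creates an experiment in LangSmith and records the feedback there.

```python
from typing import Any, Callable, Dict, List

Evaluator = Callable[..., Dict[str, Any]]


def run_local_eval(
    target: Callable[[Dict[str, Any]], Any],
    examples: List[Dict[str, Any]],
    evaluators: List[Evaluator],
) -> List[Dict[str, Any]]:
    """Hypothetical offline imitation of the evaluate loop."""
    rows = []
    for example in examples:
        outputs = target(example["inputs"])
        feedback = [
            ev(
                inputs=example["inputs"],
                outputs=outputs,
                # In a dataset example, "outputs" holds the reference outputs.
                reference_outputs=example.get("outputs"),
            )
            for ev in evaluators
        ]
        rows.append({"inputs": example["inputs"], "outputs": outputs, "feedback": feedback})
    return rows


def length_evaluator(*, outputs: Any, **_: Any) -> Dict[str, Any]:
    """Hypothetical conciseness proxy: pass if the answer has at most 12 words."""
    return {"key": "conciseness", "score": len(str(outputs).split()) <= 12}


examples = [
    {"inputs": {"question": "What color is the sky?"}, "outputs": {"answer": "Blue."}},
]
rows = run_local_eval(lambda inputs: "What color is the sky?", examples, [length_evaluator])
```

Because every evaluator receives the same three keyword arguments and ignores the ones it does not need, prebuilt and custom evaluators can be mixed freely in the evaluators list.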

For a complete list of available evaluators, see the openevals and agentevals repos.