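Run evaluations with multimodal content

LangSmith lets you create dataset examples with file attachments (such as images, audio files, or documents) so that you can reference them when evaluating applications that use multimodal inputs or outputs.

While you can include multimodal data in your examples by base64 encoding it, this approach is inefficient: the encoded data takes up more space than the original binary files, which results in slower transfers to and from LangSmith. Using attachments instead provides two key benefits:

- Faster upload and download speeds due to more efficient binary file transfer
- Enhanced visualization of different file types in the LangSmith UI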
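Base64 maps every 3 input bytes to 4 output characters, so an encoded file is about a third larger than the raw bytes before any JSON escaping is added. The following standard-library check makes the overhead concrete. The helper name `base64_overhead` is illustrative only:

```python
import base64
import os


def base64_overhead(raw: bytes) -> float:
    """Return the size of the base64 encoding relative to the raw bytes."""
    encoded = base64.b64encode(raw)
    return len(encoded) / len(raw)


# 3 MiB of random bytes stands in for an audio or image file.
# 3 * 1024 * 1024 is divisible by 3, so no padding is added and the ratio is exactly 4/3.
payload = os.urandom(3 * 1024 * 1024)
ratio = base64_overhead(payload)
print(f"base64 is {ratio:.3f}x the raw size")
```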

SDK

1. Create examples with attachments

To upload examples with attachments using the SDK, use the `create_examples` / `update_examples` Python methods or the `uploadExamplesMultipart` / `updateExamplesMultipart` TypeScript methods.

Python

Requires `langsmith>=0.3.13`.

```python
import requests
import uuid
from pathlib import Path

from langsmith import Client

# Publicly available test files
pdf_url = "https://www.w3.org/WAI/ER/tests/xhtml/testfiles/resources/pdf/dummy.pdf"
wav_url = "https://openaiassets.blob.core.windows.net/$web/API/docs/audio/alloy.wav"
img_url = "https://www.w3.org/Graphics/PNG/nurbcup2si.png"

# Fetch the files as bytes
pdf_bytes = requests.get(pdf_url).content
wav_bytes = requests.get(wav_url).content
img_bytes = requests.get(img_url).content

# Create the dataset
ls_client = Client()
dataset_name = "attachment-test-dataset"
dataset = ls_client.create_dataset(
    dataset_name=dataset_name,
    description="Test dataset for evals with publicly available attachments",
)

inputs = {
    "audio_question": "What is in this audio clip?",
    "image_question": "What is in this image?",
}

outputs = {
    "audio_answer": "The sun rises in the east and sets in the west. This simple fact has been observed by humans for thousands of years.",
    "image_answer": "A mug with a blanket over it.",
}

# Define an example with attachments
example_id = uuid.uuid4()
example = {
    "id": example_id,
    "inputs": inputs,
    "outputs": outputs,
    "attachments": {
        "my_pdf": {"mime_type": "application/pdf", "data": pdf_bytes},
        "my_wav": {"mime_type": "audio/wav", "data": wav_bytes},
        "my_img": {"mime_type": "image/png", "data": img_bytes},
        # Example of an attachment specified via a local file path:
        # "my_local_img": {"mime_type": "image/png", "data": Path(__file__).parent / "my_local_img.png"},
    },
}

# Create the example
ls_client.create_examples(
    dataset_id=dataset.id,
    examples=[example],
    # Uncomment this flag if you'd like to upload attachments from local files:
    # dangerously_allow_filesystem=True
)
```

TypeScript

Requires `langsmith` version >= 0.2.13.

You can use the `uploadExamplesMultipart` method to upload examples with attachments. Note that this is a different method from the standard `createExamples` method, which currently does not support attachments.

Each attachment requires either a `Uint8Array` or an `ArrayBuffer` as the data type:

- `Uint8Array`: useful for handling binary data directly.
- `ArrayBuffer`: represents fixed-length binary data, which can be converted to a `Uint8Array` as needed.

Note that you cannot pass a file path directly in the TypeScript SDK, because accessing local files is not supported in all runtime environments.

```typescript
import { Client } from "langsmith";
import { v4 as uuid4 } from "uuid";

// Publicly available test files
const pdfUrl = "https://www.w3.org/WAI/ER/tests/xhtml/testfiles/resources/pdf/dummy.pdf";
const wavUrl = "https://openaiassets.blob.core.windows.net/$web/API/docs/audio/alloy.wav";
const pngUrl = "https://www.w3.org/Graphics/PNG/nurbcup2si.png";

// Helper function to fetch a file as an ArrayBuffer
async function fetchArrayBuffer(url: string): Promise<ArrayBuffer> {
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`Failed to fetch ${url}: ${response.statusText}`);
  }
  return response.arrayBuffer();
}

// Fetch files as ArrayBuffers
const pdfArrayBuffer = await fetchArrayBuffer(pdfUrl);
const wavArrayBuffer = await fetchArrayBuffer(wavUrl);
const pngArrayBuffer = await fetchArrayBuffer(pngUrl);

// Create the LangSmith client (ensure LANGSMITH_API_KEY is set in the environment)
const langsmithClient = new Client();

// Create a unique dataset name
const datasetName = "attachment-test-dataset:" + uuid4().substring(0, 8);

// Create the dataset
const dataset = await langsmithClient.createDataset(datasetName, {
  description: "Test dataset for evals with publicly available attachments",
});

// Define the example with attachments
const exampleId = uuid4();
const example = {
  id: exampleId,
  inputs: {
    audio_question: "What is in this audio clip?",
    image_question: "What is in this image?",
  },
  outputs: {
    audio_answer: "The sun rises in the east and sets in the west. This simple fact has been observed by humans for thousands of years.",
    image_answer: "A mug with a blanket over it.",
  },
  attachments: {
    my_pdf: {
      mimeType: "application/pdf",
      data: pdfArrayBuffer,
    },
    my_wav: {
      mimeType: "audio/wav",
      data: wavArrayBuffer,
    },
    my_img: {
      mimeType: "image/png",
      data: pngArrayBuffer,
    },
  },
};

// Upload the example with attachments to the dataset
await langsmithClient.uploadExamplesMultipart(dataset.id, [example]);
```

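In addition to passing attachments as bytes, you can specify them as paths to local files. To do so, pass the file path as the attachment's `data` value and set `dangerously_allow_filesystem=True`:

```python
ls_client.create_examples(..., dangerously_allow_filesystem=True)
```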
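When many local files need to be attached, you can build the `attachments` dictionary from a folder. The following is a minimal sketch. `attachments_from_dir` is a hypothetical helper, not part of the LangSmith SDK. It keys each attachment by the file's stem, so two files with the same stem would collide. It also guesses MIME types from file extensions with the standard `mimetypes` module:

```python
import mimetypes
from pathlib import Path


def attachments_from_dir(folder: Path) -> dict:
    """Hypothetical helper: map each file in `folder` to an attachment dict
    whose `data` is a local Path (upload with dangerously_allow_filesystem=True)."""
    attachments = {}
    for path in sorted(folder.iterdir()):
        if not path.is_file():
            continue  # skip subdirectories
        mime_type, _ = mimetypes.guess_type(path.name)
        attachments[path.stem] = {
            # Fall back to a generic binary type when the extension is unknown
            "mime_type": mime_type or "application/octet-stream",
            "data": path,
        }
    return attachments


# Usage (requires a LangSmith client and dataset, so it is left commented out):
# example = {"inputs": inputs, "outputs": outputs,
#            "attachments": attachments_from_dir(Path("assets"))}
# ls_client.create_examples(dataset_id=dataset.id, examples=[example],
#                           dangerously_allow_filesystem=True)
```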

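2. Run evaluations

Define a target function

Now that we have a dataset that includes examples with attachments, we can define a target function to run over these examples. The following example simply uses OpenAI's GPT-4o models to answer questions about an image and an audio clip.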

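Python

The target function you are evaluating must have two positional arguments in order to consume the attachments associated with the example: the first must be called `inputs` and the second must be called `attachments`.

- The `inputs` argument is a dictionary that contains the input data for the example, excluding the attachments.
- The `attachments` argument is a dictionary that maps each attachment name to a dictionary containing a presigned URL, the `mime_type`, and a reader of the file's bytes content. You can use either the presigned URL or the reader to get the file contents. Each value in the `attachments` dictionary is a dictionary with the following structure:

```python
{
    "presigned_url": str,
    "mime_type": str,
    "reader": BinaryIO
}
```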
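If a target function should work with whichever access path is available, a small helper can prefer the `reader` and fall back to downloading from `presigned_url`. This is a sketch. `read_attachment_bytes` is a hypothetical name, and the SDK does not document here whether the reader can be rewound, so the code checks `seekable()` before calling `seek(0)`:

```python
import urllib.request
from typing import BinaryIO, TypedDict


class AttachmentInfo(TypedDict, total=False):
    presigned_url: str
    mime_type: str
    reader: BinaryIO


def read_attachment_bytes(attachment: AttachmentInfo) -> bytes:
    """Hypothetical helper: read from the reader if present, else download."""
    reader = attachment.get("reader")
    if reader is not None:
        # Rewind (when possible) in case another step already consumed the stream
        if reader.seekable():
            reader.seek(0)
        return reader.read()
    # No reader: fetch the file contents via the presigned URL
    with urllib.request.urlopen(attachment["presigned_url"]) as resp:
        return resp.read()
```

Inside `file_qa`, `read_attachment_bytes(attachments["my_wav"])` could then replace the direct `reader.read()` call.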

```python
import base64

from langsmith.wrappers import wrap_openai
from openai import OpenAI

client = wrap_openai(OpenAI())


# Define a target function that uses attachments
def file_qa(inputs, attachments):
    # Read the audio bytes from the reader and encode them in base64
    audio_reader = attachments["my_wav"]["reader"]
    audio_b64 = base64.b64encode(audio_reader.read()).decode("utf-8")
    audio_completion = client.chat.completions.create(
        model="gpt-4o-audio-preview",
        messages=[
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": inputs["audio_question"]},
                    {
                        "type": "input_audio",
                        "input_audio": {"data": audio_b64, "format": "wav"},
                    },
                ],
            }
        ],
    )

    # Most models support taking in an image URL directly in addition to base64 encoded images.
    # You can pipe the image presigned URL directly to the model.
    image_url = attachments["my_img"]["presigned_url"]
    image_completion = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": inputs["image_question"]},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    )

    return {
        "audio_answer": audio_completion.choices[0].message.content,
        "image_answer": image_completion.choices[0].message.content,
    }
```

TypeScript

In the TypeScript SDK, the `config` argument is used to pass the attachments to the target function if `includeAttachments` is set to `true`. The `config` will contain `attachments`, an object mapping each attachment name to an object of the form:

```typescript
{
  presigned_url: string,
  mime_type: string,
}
```

```typescript
import OpenAI from "openai";
import { wrapOpenAI } from "langsmith/wrappers";

const client: any = wrapOpenAI(new OpenAI());

async function fileQA(inputs: Record<string, any>, config?: Record<string, any>) {
  const presignedUrl = config?.attachments?.["my_wav"]?.presigned_url;
  if (!presignedUrl) {
    throw new Error("No presigned URL provided for audio.");
  }
  const response = await fetch(presignedUrl);
  if (!response.ok) {
    throw new Error(`Failed to fetch audio: ${response.statusText}`);
  }
  const arrayBuffer = await response.arrayBuffer();
  const uint8Array = new Uint8Array(arrayBuffer);
  // Buffer is a Node.js API
  const audioB64 = Buffer.from(uint8Array).toString("base64");

  const audioCompletion = await client.chat.completions.create({
    model: "gpt-4o-audio-preview",
    messages: [
      {
        role: "user",
        content: [
          { type: "text", text: inputs["audio_question"] },
          {
            type: "input_audio",
            input_audio: {
              data: audioB64,
              format: "wav",
            },
          },
        ],
      },
    ],
  });

  const imageUrl = config?.attachments?.["my_img"]?.presigned_url;
  const imageCompletion = await client.chat.completions.create({
    model: "gpt-4o-mini",
    messages: [
      {
        role: "user",
        content: [
          { type: "text", text: inputs["image_question"] },
          {
            type: "image_url",
            image_url: {
              url: imageUrl,
            },
          },
        ],
      },
    ],
  });

  return {
    audio_answer: audioCompletion.choices[0].message.content,
    image_answer: imageCompletion.choices[0].message.content,
  };
}
```

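Define custom evaluators

The same rules as above determine whether an evaluator receives attachments.

The evaluator below uses an LLM to judge whether the image description in the outputs is consistent with the image. To learn more about how to define LLM-based evaluators, please see this guide.

Python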

```python
# Assumes you've installed pydantic
from pydantic import BaseModel


def valid_image_description(outputs: dict, attachments: dict) -> bool:
    """Use an LLM to judge if the image description and image are consistent."""
    instructions = """
Does the description of the following image make sense?
Please carefully review the image and the description to determine if the description is valid."""

    class Response(BaseModel):
        description_is_valid: bool

    image_url = attachments["my_img"]["presigned_url"]
    response = client.beta.chat.completions.parse(
        model="gpt-4o",
        messages=[
            {"role": "system", "content": instructions},
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": image_url}},
                    {"type": "text", "text": outputs["image_answer"]},
                ],
            },
        ],
        response_format=Response,
    )
    return response.choices[0].message.parsed.description_is_valid


ls_client.evaluate(
    file_qa,
    data=dataset_name,
    evaluators=[valid_image_description],
)
```

TypeScript

```typescript
import { zodResponseFormat } from "openai/helpers/zod";
import { z } from "zod";
import { evaluate } from "langsmith/evaluation";

const DescriptionResponse = z.object({
  description_is_valid: z.boolean(),
});

async function validImageDescription({
  outputs,
  attachments,
}: {
  outputs?: any;
  attachments?: any;
}): Promise<{ key: string; score: boolean }> {
  const instructions = `Does the description of the following image make sense?
Please carefully review the image and the description to determine if the description is valid.`;

  const imageUrl = attachments?.["my_img"]?.presigned_url;

  const completion = await client.beta.chat.completions.parse({
    model: "gpt-4o",
    messages: [
      {
        role: "system",
        content: instructions,
      },
      {
        role: "user",
        content: [
          { type: "image_url", image_url: { url: imageUrl } },
          { type: "text", text: outputs?.image_answer },
        ],
      },
    ],
    response_format: zodResponseFormat(DescriptionResponse, "imageResponse"),
  });

  const score: boolean = completion.choices[0]?.message?.parsed?.description_is_valid ?? false;
  return { key: "valid_image_description", score };
}

const resp = await evaluate(fileQA, {
  data: datasetName,
  // Need to pass flag to include attachments
  includeAttachments: true,
  evaluators: [validImageDescription],
  client: langsmithClient,
});
```
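Evaluators that only need text can omit the `attachments` parameter entirely. The following sketch assumes the Python SDK passes the example's reference outputs to an evaluator that declares a `reference_outputs` parameter. `image_answer_f1` is a hypothetical name. Token-level F1 is a simple lexical stand-in, not a substitute for the LLM judge above:

```python
import re
from collections import Counter


def _tokens(text: str) -> list[str]:
    # Lowercase and keep alphanumeric runs (plus apostrophes) as tokens
    return re.findall(r"[a-z0-9']+", text.lower())


def image_answer_f1(outputs: dict, reference_outputs: dict) -> dict:
    """Text-only evaluator sketch: token-level F1 between predicted and
    reference image answers. It does not declare `attachments`."""
    pred = _tokens(outputs.get("image_answer") or "")
    ref = _tokens(reference_outputs.get("image_answer") or "")
    # Multiset intersection counts each shared token at most min(count) times
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return {"key": "image_answer_f1", "score": 0.0}
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return {"key": "image_answer_f1", "score": 2 * precision * recall / (precision + recall)}
```

You could pass it alongside the LLM judge, for example `evaluators=[valid_image_description, image_answer_f1]` in `ls_client.evaluate`.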

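Update examples with attachments

In the code above, we showed how to add examples with attachments to a dataset. You can also update these same examples using the SDK.

As with existing examples, datasets are versioned when you update them with attachments, so you can navigate to the dataset version history to see the changes made to each example. To learn more, please see this guide.

When updating an example that has attachments, you can update the attachments in a few different ways:

- Pass in new attachments
- Rename existing attachments
- Delete existing attachments

Please note:

- Any existing attachments that are not explicitly renamed or retained will be deleted.
- An error will be raised if you pass a non-existent attachment name to `retain` or `rename`.
- New attachments take precedence over existing attachments if the same attachment name appears in both the `attachments` and `attachments_operations` fields.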
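To predict what an update will do before sending it, you can model the update rules locally. This sketch assumes the following behavior: retained attachments are kept, renamed attachments keep their data under the new name, new attachments win on name collisions, anything else is deleted, and unknown names in `retain` or `rename` are an error. `preview_attachment_update` is a hypothetical helper. It does not call the LangSmith API and is not the server's implementation:

```python
def preview_attachment_update(
    existing: set[str],
    new_names: set[str],
    retain: list[str],
    rename: dict[str, str],
) -> tuple[dict[str, str], set[str]]:
    """Hypothetical local model of the update rules.
    Returns (final_name -> origin, names that would be deleted)."""
    # Names referenced by retain/rename must already exist on the example
    for name in [*retain, *rename]:
        if name not in existing:
            raise ValueError(f"Unknown attachment name: {name!r}")
    final: dict[str, str] = {name: "retained" for name in retain}
    for old_name, new_name in rename.items():
        final[new_name] = f"renamed from {old_name}"
    # New attachments take precedence when a name collides
    for name in new_names:
        final[name] = "new"
    # Anything neither retained nor renamed is dropped
    deleted = set(existing) - set(retain) - set(rename)
    return final, deleted
```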

Python

```python
example_update = {
    "id": example_id,
    "attachments": {
        # These are net new attachments
        "my_new_file": ("text/plain", b"foo bar"),
    },
    "inputs": inputs,
    "outputs": outputs,
    # Any attachments not in rename/retain will be deleted.
    # In this case, that would be "my_img" if we uploaded it.
    "attachments_operations": {
        # Retained attachments will stay exactly the same
        "retain": ["my_pdf"],
        # Renaming attachments preserves the original data
        "rename": {
            "my_wav": "my_new_wav",
        },
    },
}

ls_client.update_examples(dataset_id=dataset.id, updates=[example_update])
```

TypeScript

```typescript
import { ExampleUpdateWithAttachments } from "langsmith/schemas";

const exampleUpdate: ExampleUpdateWithAttachments = {
  id: exampleId,
  attachments: {
    // These are net new attachments
    "my_new_file": {
      mimeType: "text/plain",
      data: Buffer.from("foo bar"),
    },
  },
  attachments_operations: {
    // Retained attachments will stay exactly the same
    retain: ["my_img"],
    // Renaming attachments preserves the original data
    rename: {
      "my_wav": "my_new_wav",
    },
    // Any attachments not in rename/retain will be deleted.
    // In this case, that would be "my_pdf"
  },
};

await langsmithClient.updateExamplesMultipart(
  dataset.id,
  [exampleUpdate],
);
```

UI

1. Create examples with attachments

You can add examples with attachments to a dataset in a few different ways.

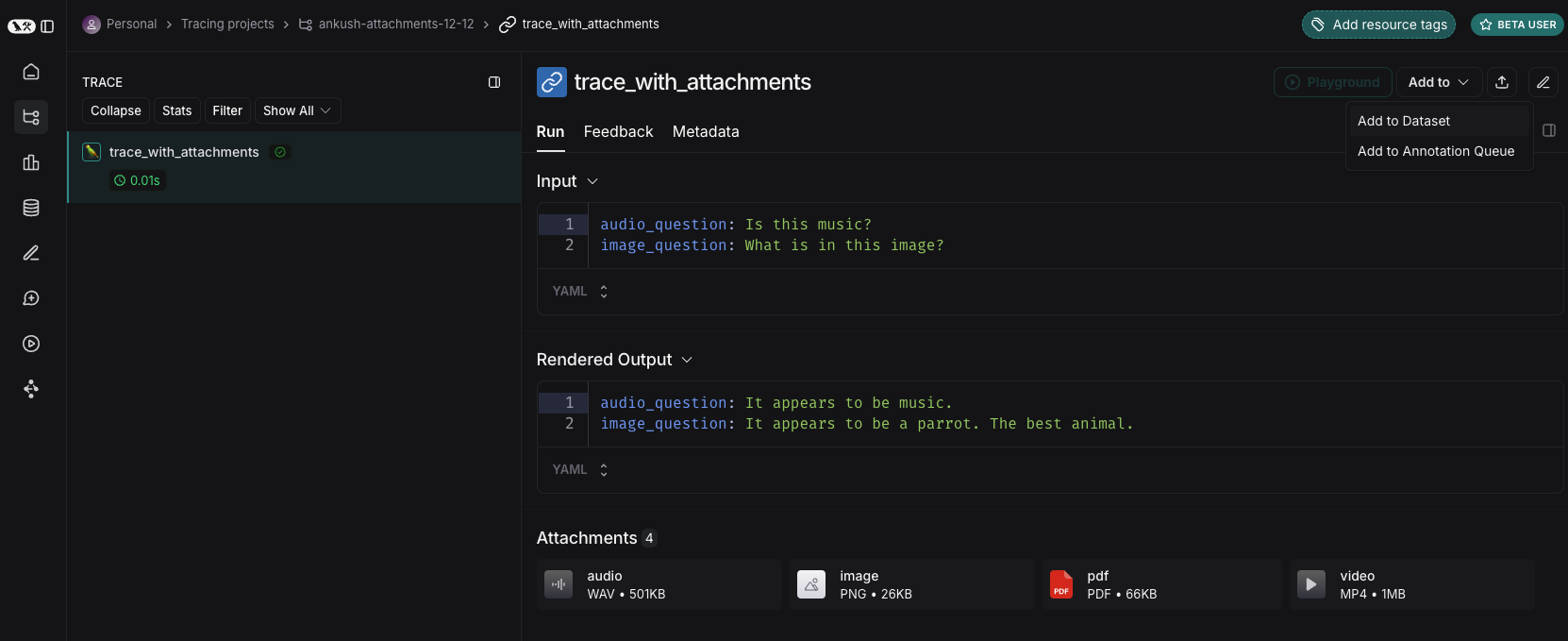

From existing runs

When adding runs to a LangSmith dataset, attachments can be selectively propagated from the source run to the destination example. To learn more, please see this guide.

From scratch

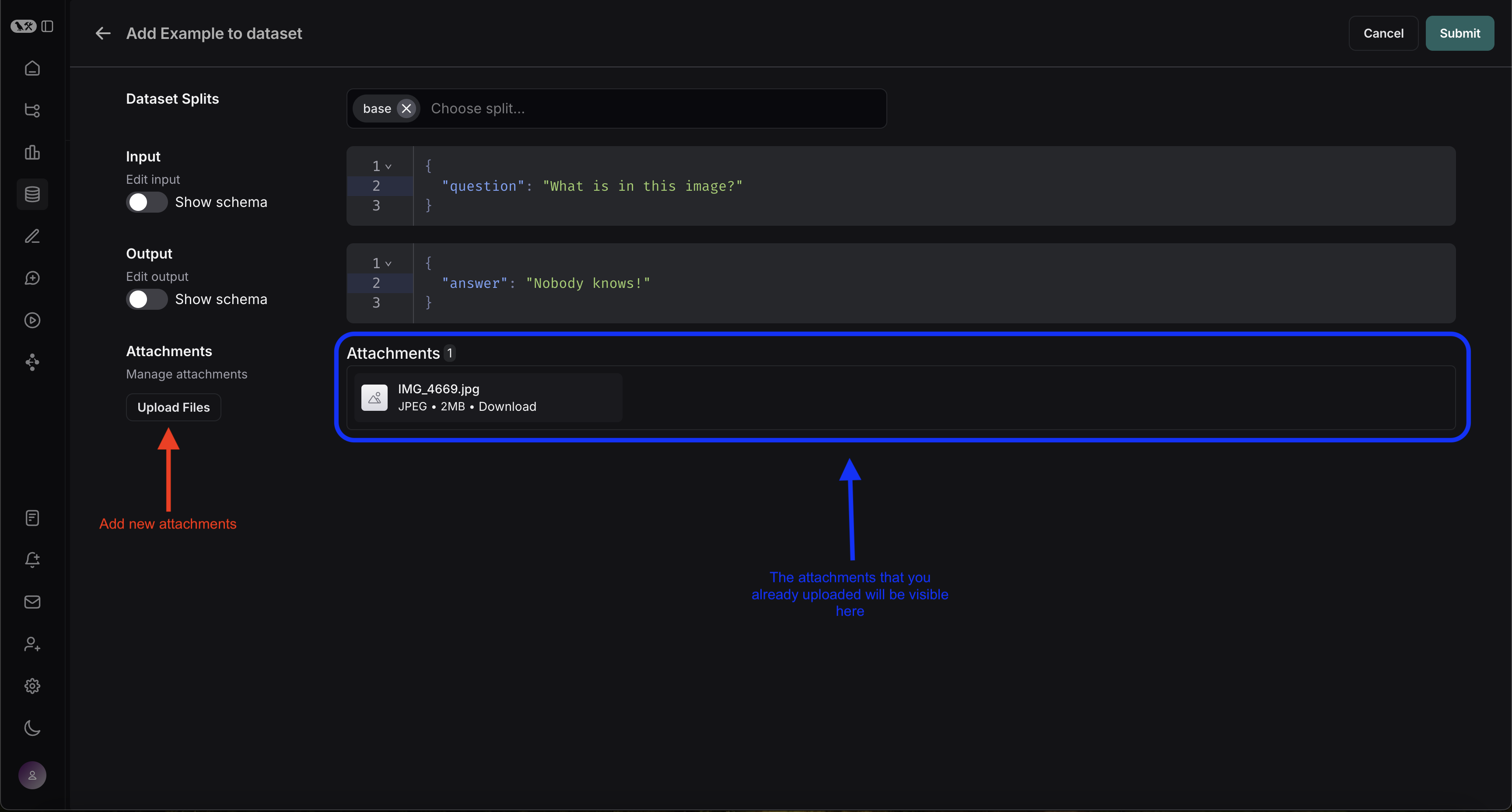

You can create examples with attachments directly from the LangSmith UI. Click the + Example button in the Examples tab of the dataset UI.

Then upload attachments using the "Upload Files" button:

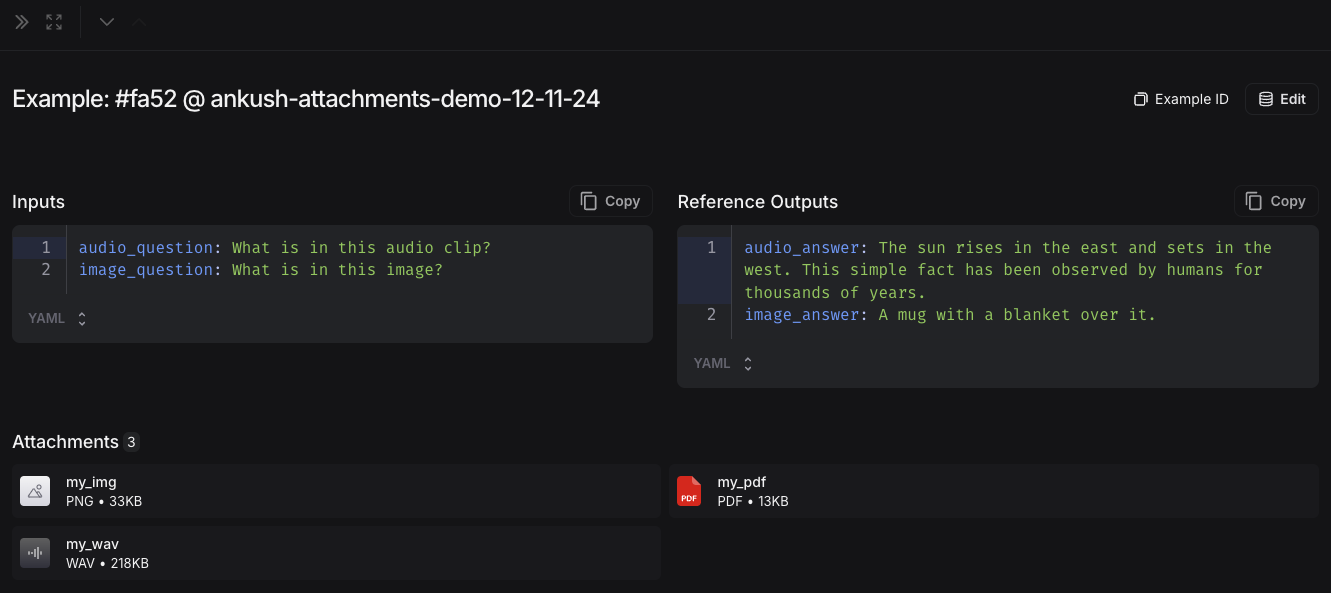

Once uploaded, you can view examples with attachments in the LangSmith UI. Each attachment will be rendered with a preview for easy inspection.

2. Create a multimodal prompt

The LangSmith UI allows you to include attachments in your prompts when evaluating multimodal models:

First, click the file icon in the message where you want to add multimodal content. Next, add a template variable for the attachment(s) you want to include for each example.

- For a single attachment type: Use the suggested variable name. Note: all examples must have an attachment with this name.

- For multiple attachments, or if your attachments have varying names from one example to another: use the `All attachments` variable to include all available attachments for each example.

Define custom evaluators

The LangSmith playground does not currently support pulling multimodal content into evaluators. If this would be helpful for your use case, please let us know in the LangChain community Slack (sign up here if you're not already a member)!

You can evaluate a model's text output by adding an evaluator that takes in the example's inputs and outputs. Even without multimodal support in your evaluators, you can still run text-only evaluations. For example:

- OCR → text correction: Use a vision model to extract text from a document, then evaluate the accuracy of the extracted output.

- Speech-to-text → transcription quality: Use a voice model to transcribe audio to text, then evaluate the transcription against your reference.
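A common text-only metric for the speech-to-text case is word error rate (WER). WER is the word-level edit distance between the transcript and the reference, divided by the number of reference words, so lower is better. The following is a minimal sketch for any place where you can run Python evaluator code. It assumes both the outputs and the reference outputs store text under a hypothetical `transcript` key. `word_error_rate` and `transcription_wer` are illustrative names:

```python
import re


def _words(text: str) -> list[str]:
    # Lowercase and drop punctuation so "west." matches "west"
    return re.findall(r"[\w']+", text.lower())


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref, hyp = _words(reference), _words(hypothesis)
    # prev holds the previous DP row: distances from ref[:i-1] to each prefix of hyp
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            # deletion, insertion, substitution/match
            curr[j] = min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost)
        prev = curr
    return prev[len(hyp)] / max(len(ref), 1)


def transcription_wer(outputs: dict, reference_outputs: dict) -> dict:
    """Evaluator-style wrapper; the `transcript` key is a hypothetical schema."""
    score = word_error_rate(reference_outputs["transcript"], outputs["transcript"])
    return {"key": "wer", "score": score}
```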

For more information on defining custom evaluators, see the LLM as Judge guide.

Update examples with attachments

Attachments are limited to 20MB in size in the UI.
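If you prepare files locally before uploading them through the UI, a quick pre-check can flag files over the limit. This sketch assumes "20MB" means the smaller decimal value, 20,000,000 bytes, so that it errs on the safe side. `oversized_files` and `UI_ATTACHMENT_LIMIT_BYTES` are hypothetical names:

```python
from pathlib import Path

# Assumption: treat 20MB as 20,000,000 bytes (the stricter reading of "MB")
UI_ATTACHMENT_LIMIT_BYTES = 20_000_000


def oversized_files(paths: list[Path], limit: int = UI_ATTACHMENT_LIMIT_BYTES) -> list[Path]:
    """Return the files whose size on disk exceeds `limit` bytes."""
    return [p for p in paths if p.stat().st_size > limit]
```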

When editing an example in the UI, you can:

- Upload new attachments

- Rename and delete attachments

- Reset attachments to their previous state using the quick reset button

Changes are not saved until you click submit.