Tracing with OpenTelemetry

LangSmith can accept traces from OpenTelemetry-based clients. This guide walks through examples of how to do this.

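In the requests below, update the LangSmith URL as needed if you use a self-hosted installation or an organization in the EU region. For the EU region, use eu.api.smith.langchain.com.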
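One way to avoid editing URLs by hand is to choose the base OTLP endpoint per region in one place. The following is a minimal sketch. The helper name `langsmith_otel_endpoint` is hypothetical, and the two hosts are the ones given in this guide. For a self-hosted installation, you would pass your own URL, because its exact path depends on your deployment.

```python
from typing import Optional

# Hosts taken from this guide; the helper itself is a hypothetical convenience.
LANGSMITH_OTEL_ENDPOINTS = {
    "us": "https://api.smith.langchain.com/otel",
    "eu": "https://eu.api.smith.langchain.com/otel",
}


def langsmith_otel_endpoint(region: str = "us", self_hosted_url: Optional[str] = None) -> str:
    """Return a base URL suitable for OTEL_EXPORTER_OTLP_ENDPOINT."""
    if self_hosted_url:
        # Self-hosted: use whatever OTLP URL your deployment exposes (assumption).
        return self_hosted_url.rstrip("/")
    try:
        return LANGSMITH_OTEL_ENDPOINTS[region.lower()]
    except KeyError:
        raise ValueError(f"unknown region {region!r}; expected 'us' or 'eu'") from None
```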

Logging traces with a basic OpenTelemetry client

This first section shows how to log traces to LangSmith using a standard OpenTelemetry client.

1. Installation

Install the OpenTelemetry SDK, the OpenTelemetry exporter package, and the OpenAI package:

```shell
pip install openai
pip install opentelemetry-sdk
pip install opentelemetry-exporter-otlp
```

2. Configure your environment

Set the environment variables for the endpoint, replacing the placeholders with your own values:

```shell
OTEL_EXPORTER_OTLP_ENDPOINT=https://api.smith.langchain.com/otel
OTEL_EXPORTER_OTLP_HEADERS="x-api-key=<your langsmith api key>"
```

Optional: to send traces to a custom project instead of "default", add a Langsmith-Project header:

```shell
OTEL_EXPORTER_OTLP_ENDPOINT=https://api.smith.langchain.com/otel
OTEL_EXPORTER_OTLP_HEADERS="x-api-key=<your langsmith api key>,Langsmith-Project=<project name>"
```
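You can also set these variables from Python instead of a shell. In that case, set them in `os.environ` before the exporter is created, because `OTLPSpanExporter` reads them when it is constructed. The following is a minimal sketch. `configure_langsmith_otlp_env` is a hypothetical helper. Header values are percent-encoded, on the assumption that the OTLP headers variable uses the W3C-Baggage-style `key=value,key=value` format. This encoding lets a project name containing spaces or commas survive parsing.

```python
import os
from typing import Optional
from urllib.parse import quote


def configure_langsmith_otlp_env(
    api_key: str,
    project: Optional[str] = None,
    endpoint: str = "https://api.smith.langchain.com/otel",
) -> None:
    """Set the OTLP exporter env vars used in this guide (hypothetical helper)."""
    headers = {"x-api-key": api_key}
    if project:
        # Route traces to a project other than "default".
        headers["Langsmith-Project"] = project
    os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = endpoint
    # Comma-separated key=value pairs; values are percent-encoded.
    os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = ",".join(
        f"{key}={quote(value, safe='')}" for key, value in headers.items()
    )
```

Call it once at startup, before the `OTLPSpanExporter(...)` line in the example that follows.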

3. Log a trace

This code sets up an OTEL tracer and exporter that send traces to LangSmith. It then calls OpenAI and records the required OpenTelemetry attributes on the span.

```python
import os

from openai import OpenAI
from opentelemetry import trace
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor
from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

# The exporter picks up OTEL_EXPORTER_OTLP_ENDPOINT and
# OTEL_EXPORTER_OTLP_HEADERS from the environment.
otlp_exporter = OTLPSpanExporter(
    timeout=10,
)

trace.set_tracer_provider(TracerProvider())
trace.get_tracer_provider().add_span_processor(
    BatchSpanProcessor(otlp_exporter)
)

tracer = trace.get_tracer(__name__)


def call_openai():
    model = "gpt-4o-mini"
    with tracer.start_as_current_span("call_open_ai") as span:
        # LangSmith-specific attributes: run type and custom metadata
        span.set_attribute("langsmith.span.kind", "LLM")
        span.set_attribute("langsmith.metadata.user_id", "user_123")
        # GenAI attributes describing the request
        span.set_attribute("gen_ai.system", "OpenAI")
        span.set_attribute("gen_ai.request.model", model)
        span.set_attribute("llm.request.type", "chat")

        messages = [
            {"role": "system", "content": "You are a helpful assistant."},
            {
                "role": "user",
                "content": "Write a haiku about recursion in programming."
            }
        ]

        # Record each input message as indexed prompt attributes
        for i, message in enumerate(messages):
            span.set_attribute(f"gen_ai.prompt.{i}.content", str(message["content"]))
            span.set_attribute(f"gen_ai.prompt.{i}.role", str(message["role"]))

        completion = client.chat.completions.create(
            model=model,
            messages=messages
        )

        # Record the response and token usage
        span.set_attribute("gen_ai.response.model", completion.model)
        span.set_attribute("gen_ai.completion.0.content", str(completion.choices[0].message.content))
        span.set_attribute("gen_ai.completion.0.role", "assistant")
        span.set_attribute("gen_ai.usage.prompt_tokens", completion.usage.prompt_tokens)
        span.set_attribute("gen_ai.usage.completion_tokens", completion.usage.completion_tokens)
        span.set_attribute("gen_ai.usage.total_tokens", completion.usage.total_tokens)
        return completion.choices[0].message


if __name__ == "__main__":
    call_openai()
```

You should then see a trace in your LangSmith dashboard.
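The loop in `call_openai` that writes `gen_ai.prompt.{i}.*` attributes can be factored into a small pure function. The same function can then record completions. The following is a minimal sketch. `message_attributes` is a hypothetical helper, and its result can be passed to the OpenTelemetry `span.set_attributes(...)` method in one call.

```python
from typing import Dict, List, Mapping


def message_attributes(
    messages: List[Mapping[str, str]], prefix: str = "gen_ai.prompt"
) -> Dict[str, str]:
    """Flatten chat messages into indexed span attributes (hypothetical helper).

    Produces keys such as "gen_ai.prompt.0.role" and "gen_ai.prompt.0.content".
    """
    attributes: Dict[str, str] = {}
    for i, message in enumerate(messages):
        attributes[f"{prefix}.{i}.role"] = str(message["role"])
        attributes[f"{prefix}.{i}.content"] = str(message["content"])
    return attributes
```

Inside the span, `span.set_attributes(message_attributes(messages))` replaces the loop. For the response, you could call `message_attributes([{"role": "assistant", "content": text}], prefix="gen_ai.completion")`.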

Supported OpenTelemetry attribute mappings

When you send traces to LangSmith through OpenTelemetry, the following attributes are mapped to LangSmith fields.

| OpenTelemetry attribute | LangSmith field | Notes |
|---|---|---|
| langsmith.trace.name | Run Name | Overrides the span name for the run |
| langsmith.span.kind | Run Type | Values: llm, chain, tool, retriever, embedding, prompt, parser |
| langsmith.span.id | Run ID | Unique identifier for the span |
| langsmith.trace.id | Trace ID | Unique identifier for the trace |
| langsmith.span.dotted_order | Dotted Order | Position in the execution tree |
| langsmith.span.parent_id | Parent Run ID | ID of the parent span |
| langsmith.trace.session_id | Session ID | Session identifier for related traces |
| langsmith.trace.session_name | Session Name | Name of the session |
| langsmith.span.tags | Tags | Custom tags attached to the span |
| gen_ai.system | metadata.ls_provider | The GenAI system (e.g., "openai", "anthropic") |
| gen_ai.prompt | inputs | The input prompt sent to the model |
| gen_ai.completion | outputs | The output generated by the model |
| gen_ai.prompt.{n}.role | inputs.messages[n].role | Role of the nth input message |
| gen_ai.prompt.{n}.content | inputs.messages[n].content | Content of the nth input message |
| gen_ai.completion.{n}.role | outputs.messages[n].role | Role of the nth output message |
| gen_ai.completion.{n}.content | outputs.messages[n].content | Content of the nth output message |
| gen_ai.request.model | invocation_params.model | The model name used for the request |
| gen_ai.response.model | invocation_params.model | The model name returned in the response |
| gen_ai.request.temperature | invocation_params.temperature | Temperature setting |
| gen_ai.request.top_p | invocation_params.top_p | Top-p sampling setting |
| gen_ai.request.max_tokens | invocation_params.max_tokens | Maximum tokens setting |
| gen_ai.request.frequency_penalty | invocation_params.frequency_penalty | Frequency penalty setting |
| gen_ai.request.presence_penalty | invocation_params.presence_penalty | Presence penalty setting |
| gen_ai.request.seed | invocation_params.seed | Random seed used for generation |
| gen_ai.request.stop_sequences | invocation_params.stop | Sequences that stop generation |
| gen_ai.request.top_k | invocation_params.top_k | Top-k sampling parameter |
| gen_ai.request.encoding_formats | invocation_params.encoding_formats | Output encoding formats |
| gen_ai.usage.input_tokens | usage_metadata.input_tokens | Number of input tokens used |
| gen_ai.usage.output_tokens | usage_metadata.output_tokens | Number of output tokens used |
| gen_ai.usage.total_tokens | usage_metadata.total_tokens | Total number of tokens used |
| gen_ai.usage.prompt_tokens | usage_metadata.input_tokens | Number of input tokens used (deprecated) |
| gen_ai.usage.completion_tokens | usage_metadata.output_tokens | Number of output tokens used (deprecated) |
| input.value | inputs | Full input value; can be a string or JSON |
| output.value | outputs | Full output value; can be a string or JSON |
| langsmith.metadata.{key} | metadata.{key} | Custom metadata |
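To make some of these rows concrete, here is a rough sketch of how a flat attribute dict could be folded into LangSmith-style `inputs`, `outputs`, and `usage_metadata` fields. It only illustrates the mapping rules stated in the table and is not LangSmith's ingestion code. `to_langsmith_fields` is hypothetical. Where both names are present, the sketch assumes the current `input_tokens`/`output_tokens` names take precedence over the deprecated `prompt_tokens`/`completion_tokens`.

```python
import re
from typing import Any, Dict, List, Mapping

# Matches indexed message attributes such as "gen_ai.prompt.0.role".
_MESSAGE_KEY = re.compile(r"^gen_ai\.(prompt|completion)\.(\d+)\.(role|content)$")


def _collect_messages(attrs: Mapping[str, Any], kind: str) -> List[Dict[str, Any]]:
    """Group gen_ai.<kind>.{n}.* attributes into a list ordered by n."""
    indexed: Dict[int, Dict[str, Any]] = {}
    for key, value in attrs.items():
        match = _MESSAGE_KEY.match(key)
        if match and match.group(1) == kind:
            indexed.setdefault(int(match.group(2)), {})[match.group(3)] = value
    return [indexed[n] for n in sorted(indexed)]


def to_langsmith_fields(attrs: Mapping[str, Any]) -> Dict[str, Any]:
    """Fold a subset of gen_ai.* attributes into run fields (illustrative only)."""
    usage: Dict[str, Any] = {}
    # Current attribute names win; deprecated names are only a fallback.
    for current, deprecated, field in (
        ("gen_ai.usage.input_tokens", "gen_ai.usage.prompt_tokens", "input_tokens"),
        ("gen_ai.usage.output_tokens", "gen_ai.usage.completion_tokens", "output_tokens"),
        ("gen_ai.usage.total_tokens", None, "total_tokens"),
    ):
        if current in attrs:
            usage[field] = attrs[current]
        elif deprecated is not None and deprecated in attrs:
            usage[field] = attrs[deprecated]
    return {
        "inputs": {"messages": _collect_messages(attrs, "prompt")},
        "outputs": {"messages": _collect_messages(attrs, "completion")},
        "usage_metadata": usage,
    }
```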

Logging traces with the Traceloop SDK

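The Traceloop SDK is an OpenTelemetry-compatible SDK that covers a range of models, vector databases, and frameworks. If it supports the integrations you want to instrument, you can use it with OpenTelemetry to log traces to LangSmith.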

For the list of integrations the Traceloop SDK supports, see the Traceloop SDK documentation.

To get started, follow these steps:

1. Installation

```shell
pip install traceloop-sdk
pip install openai
```

2. Configure your environment

Set the environment variables:

```shell
TRACELOOP_BASE_URL=https://api.smith.langchain.com/otel
TRACELOOP_HEADERS=x-api-key=<your_langsmith_api_key>
```

Optional: to send traces to a custom project instead of "default", add a Langsmith-Project header:

```shell
TRACELOOP_HEADERS=x-api-key=<your_langsmith_api_key>,Langsmith-Project=<langsmith_project_name>
```

3. Initialize the SDK

Before you can log traces, initialize the SDK:

```python
from traceloop.sdk import Traceloop

Traceloop.init()
```

4. Log a trace

Here is a complete example using OpenAI chat completions:

```python
import os

from openai import OpenAI
from traceloop.sdk import Traceloop

client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

# Initialize Traceloop so the OpenAI call below is traced automatically
Traceloop.init()

completion = client.chat.completions.create(
    model="gpt-4o-mini",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {
            "role": "user",
            "content": "Write a haiku about recursion in programming."
        }
    ]
)

print(completion.choices[0].message)
```

You should see a trace in your LangSmith dashboard like this one:

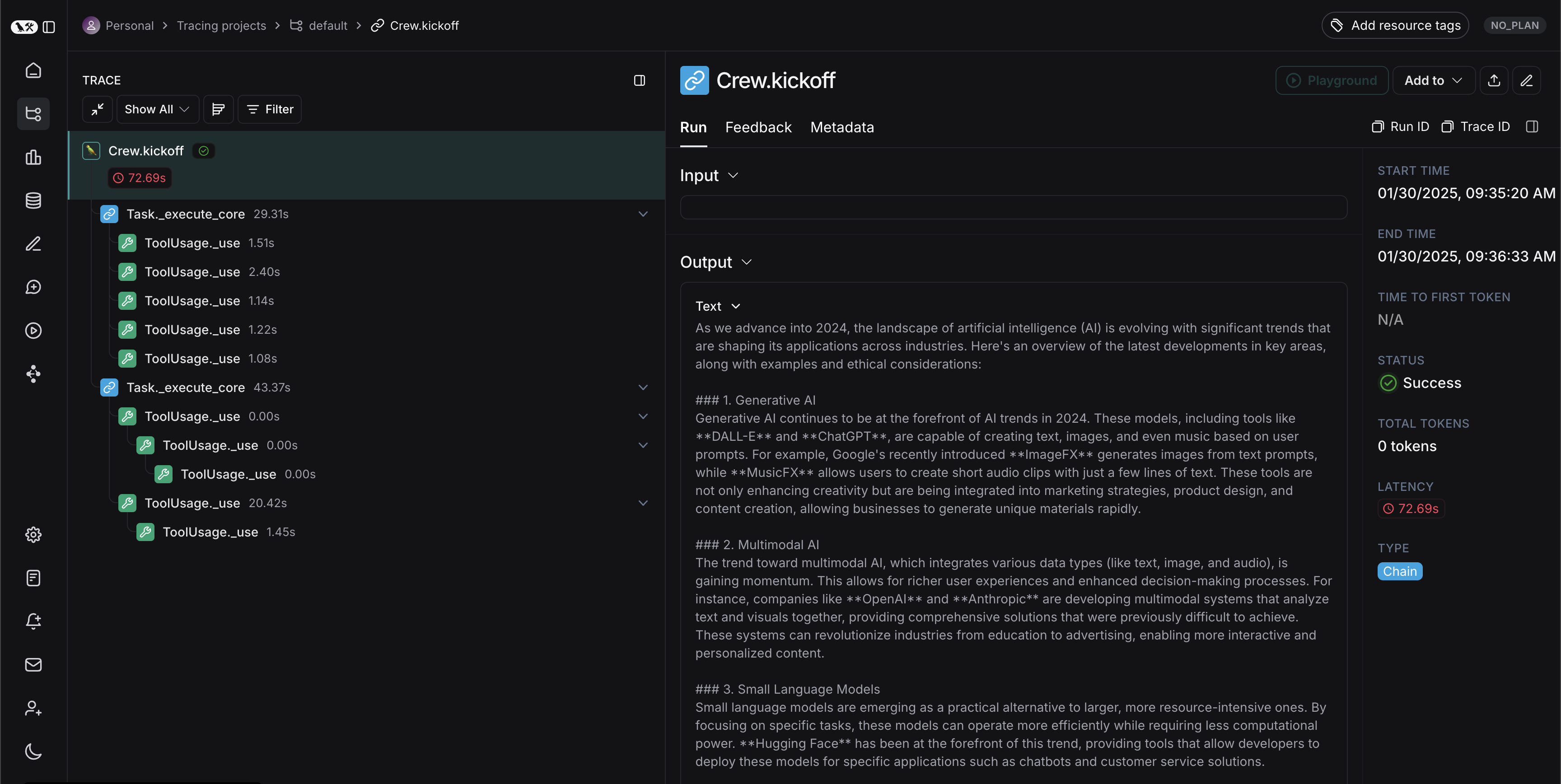

Tracing with the Arize SDK

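With the Arize SDK and OpenTelemetry, you can log traces to LangSmith from a number of other frameworks. Below is an example of tracing CrewAI to LangSmith; you can find the full list of supported frameworks here. To adapt this example to another framework, you only need to swap the instrumentor for the one that matches that framework.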
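Only the instrumentor changes between frameworks, so one way to keep the setup generic is to look up the instrumentor class from a `module:Class` string. The following is a sketch. `load_instrumentor` and the `INSTRUMENTORS` mapping are hypothetical. Apart from `CrewAIInstrumentor`, which this guide uses, the module paths are assumptions to verify against the OpenInference package you install.

```python
import importlib
from typing import Any

# Hypothetical registry; only the CrewAI entry is taken from this guide.
INSTRUMENTORS = {
    "crewai": "openinference.instrumentation.crewai:CrewAIInstrumentor",
    "openai": "openinference.instrumentation.openai:OpenAIInstrumentor",
    "langchain": "openinference.instrumentation.langchain:LangChainInstrumentor",
}


def load_instrumentor(spec: str) -> Any:
    """Import "package.module:ClassName" and return the class object."""
    module_name, _, class_name = spec.partition(":")
    if not class_name:
        raise ValueError(f"expected 'module:Class', got {spec!r}")
    module = importlib.import_module(module_name)
    return getattr(module, class_name)
```

With the tracer provider from the setup step, usage would be `load_instrumentor(INSTRUMENTORS["crewai"])().instrument(tracer_provider=provider)`.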

1. Installation

First, install the required packages:

```shell
pip install -qU arize-phoenix-otel openinference-instrumentation-crewai crewai crewai-tools
```

2. Configure your environment

Next, set the following environment variables:

```shell
OPENAI_API_KEY=<your_openai_api_key>
SERPER_API_KEY=<your_serper_api_key>
```
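A small preflight check fails fast with a clear message if either key is missing, so you don't find out partway through the workflow. The following is a minimal sketch. `missing_env` and `require_env` are hypothetical helpers, and the variable names are the two listed above.

```python
import os
from typing import Iterable, List

REQUIRED_VARS = ("OPENAI_API_KEY", "SERPER_API_KEY")


def missing_env(names: Iterable[str]) -> List[str]:
    """Return the names that are unset or empty in the environment."""
    return [name for name in names if not os.environ.get(name)]


def require_env(names: Iterable[str] = REQUIRED_VARS) -> None:
    """Raise before any application code runs if a required variable is missing."""
    missing = missing_env(names)
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
```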

3. Set up the instrumentor

Before running any application code, set up the instrumentor. You can replace it with the instrumentor for any of the supported frameworks.

```python
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor
from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

# Add LangSmith API Key for tracing
LANGSMITH_API_KEY = "YOUR_API_KEY"
# Set the endpoint for OTEL collection
ENDPOINT = "https://api.smith.langchain.com/otel/v1/traces"
# Select the project to trace to
LANGSMITH_PROJECT = "YOUR_PROJECT_NAME"

# Create the OTLP exporter
otlp_exporter = OTLPSpanExporter(
    endpoint=ENDPOINT,
    headers={"x-api-key": LANGSMITH_API_KEY, "Langsmith-Project": LANGSMITH_PROJECT}
)

# Set up the trace provider
provider = TracerProvider()
processor = BatchSpanProcessor(otlp_exporter)
provider.add_span_processor(processor)

# Now instrument CrewAI
from openinference.instrumentation.crewai import CrewAIInstrumentor

CrewAIInstrumentor().instrument(tracer_provider=provider)
```
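Hardcoding the API key is fine for a quick test, but reading it from the environment is safer for code you commit. Note that when `endpoint=` is passed explicitly to the HTTP `OTLPSpanExporter`, the exporter uses it unchanged, so it must include the `/v1/traces` path, as `ENDPOINT` above does. By contrast, the SDK appends that path itself to the base `OTEL_EXPORTER_OTLP_ENDPOINT` variable used in the first section. The following is a sketch. The variable names `LANGSMITH_API_KEY` and `LANGSMITH_PROJECT` and the helper `langsmith_exporter_kwargs` are assumptions for illustration.

```python
import os
from typing import Dict


def langsmith_exporter_kwargs(
    base_url: str = "https://api.smith.langchain.com/otel",
) -> Dict[str, object]:
    """Build OTLPSpanExporter keyword arguments from env vars (hypothetical helper)."""
    api_key = os.environ.get("LANGSMITH_API_KEY")
    if not api_key:
        raise RuntimeError("Set LANGSMITH_API_KEY before configuring the exporter")
    headers = {"x-api-key": api_key}
    project = os.environ.get("LANGSMITH_PROJECT")
    if project:
        headers["Langsmith-Project"] = project
    # An explicit endpoint is used as-is, so append the OTLP/HTTP traces path here.
    return {"endpoint": base_url.rstrip("/") + "/v1/traces", "headers": headers}
```

The exporter line would then become `otlp_exporter = OTLPSpanExporter(**langsmith_exporter_kwargs())`.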

4. Log a trace

Now run your CrewAI workflow; traces are logged to LangSmith automatically:

```python
from crewai import Agent, Task, Crew, Process
from crewai_tools import SerperDevTool

search_tool = SerperDevTool()

# Define your agents with roles and goals
researcher = Agent(
    role='Senior Research Analyst',
    goal='Uncover cutting-edge developments in AI and data science',
    backstory="""You work at a leading tech think tank.
    Your expertise lies in identifying emerging trends.
    You have a knack for dissecting complex data and presenting actionable insights.""",
    verbose=True,
    allow_delegation=False,
    # You can pass an optional llm attribute specifying which model to use.
    # llm=ChatOpenAI(model_name="gpt-3.5", temperature=0.7),
    tools=[search_tool]
)

writer = Agent(
    role='Tech Content Strategist',
    goal='Craft compelling content on tech advancements',
    backstory="""You are a renowned Content Strategist, known for your insightful and engaging articles.
    You transform complex concepts into compelling narratives.""",
    verbose=True,
    allow_delegation=True
)

# Create tasks for your agents
task1 = Task(
    description="""Conduct a comprehensive analysis of the latest advancements in AI in 2024.
    Identify key trends, breakthrough technologies, and potential industry impacts.""",
    expected_output="Full analysis report in bullet points",
    agent=researcher
)

task2 = Task(
    description="""Using the insights provided, develop an engaging blog
    post that highlights the most significant AI advancements.
    Your post should be informative yet accessible, catering to a tech-savvy audience.
    Make it sound cool, avoid complex words so it doesn't sound like AI.""",
    expected_output="Full blog post of at least 4 paragraphs",
    agent=writer
)

# Instantiate your crew with a sequential process
crew = Crew(
    agents=[researcher, writer],
    tasks=[task1, task2],
    verbose=False,
    process=Process.sequential
)

# Get your crew to work!
result = crew.kickoff()

print("######################")
print(result)
```

You should then see the trace in your LangSmith project.